Response to Tyler Cowen on AI risk

AGI will effectively be the most powerful weapon man has ever created. Neither “lockdown forever” nor “let ‘er rip” is a productive response; we can chart a smarter path.

During covid, there were two loud camps:

- Lockdown forever

- Let ‘er rip

Both were untenable. Thankfully, a handful of smart people in influential positions helped chart another option:

- a) Maximally accelerate vaccine production + b) masks/voluntary social distancing in the meantime

I see a similar situation on AI right now. There are two loud camps:

- Shut it all down (Eliezer)

- Just go for it

Both are untenable. We can chart a better path:

- a) Operation Warp Speed for AGI alignment + b) AI lab self-regulation and independent monitoring for safety (such as via ARC evals).

Recently, Tyler Cowen wrote a post on AI and AI risk. It is worth reading (and worth reading with an open mind). Tyler makes many interesting and useful points—but, surprisingly, Tyler seems to advocate for a path more like 2.

We had a great debate at the recent Emergent Ventures conference. Here’s my response to him.[1] In brief:

- Tyler asks for historical reasoning, rather than “nine-part arguments.” I say, AGI will effectively be the most powerful weapon man has ever created. Applying historical reasoning, that has two implications. We must beat China. And we must have reliable command and control over this immensely powerful weapon—but we’re not currently technically on track to be able to do this.

- Tyler presents the main question as “ongoing stasis” vs. “take the plunge.” I say, we’re taking the plunge alright; let’s focus on the smart things we can do. If we get our act together, we can actually solve this problem!

- Tyler claims predicting AI risk is impossible. I say, he said that about predicting recent AI progress too, and he was wrong. The people who did correctly predict recent AI progress are the same people concerned about (lack of) progress in AI alignment/control—and that’s because they are the people actually building these systems. Forget LessWrong, Tyler! It’s all the AI lab CEOs who are worried about this.

Some core propositions on AI

I agree with Tyler that AI will be transformative. Our bubble “outside of history” might be coming to an end.

At the very least, AI will be as big of a deal as the internet. But I also think there’s a large chance it’s much a bigger deal yet, more like the industrial revolution. AGI could lead to dramatically faster economic growth—imagine every R&D effort now having 100 million of the smartest researchers to help (just in the form of human-level AGIs). This is the scenario I will focus on here, because I believe it is by far the most consequential.[2]

I agree with Tyler that while this will cause great upheavals, the good will likely considerably outweigh the bad. Past technological progress has been amazing for human wellbeing; so too will the new vistas opened by AI.[3]

But I also think that AGI will effectively be the most powerful weapon man has ever created. (Again, imagine if you had 100 million of the world's smartest researchers to help you build a bioweapon—that’s what AGIs will be able to do.) Think nukes, but 10x.[4] Applying historical reasoning, that has two big implications.

-

First, the United States must beat China on AI. Too few “AGI commentators” come out clearly on this. The totalitarian threat is real; twice in the last century did the free world barely manage to beat it back.[5] If AGI can enable dramatically faster technological progress, whoever dominates in AI may well be able to dominate the world. The AI chip export controls are good, and the US currently has a healthy lead in AI. But things can change quickly,[6] and history is live again. (Don’t think China isn't paying attention.)[7] [8]

-

Second, we must prevent accidents—and accidental apocalypse. There are too many harrowing stories from the early Cold War (Daniel Ellsberg’s book is an amazing read on this)—think “stray geese nearly triggered nuclear early warning systems, and consequently launch of US nukes; this could have easily caused nuclear armageddon.” If we are to build this immensely powerful weapon, we must be sure we can reliably control it.

I agree with Tyler that there is tremendous uncertainty, and I wouldn’t put much weight on any specific scenario. But just like I want to be really sure that there is reliable command and control for nukes, I want to be really sure we have reliable command and control for AGI systems. As with nukes—much more so than nukes—failure here could be catastrophic.

That is the alignment problem, as I understand it: ensuring AI systems reliably operate in accordance with their human operator’s command. What I would emphasize to Tyler is that this is very much an unsolved technical problem. (I discuss this in a recent post, “Nobody’s on the ball on AGI alignment”.) We don’t have a convincing technical plan for reliably controlling AGI systems, especially as they become superhuman.

Imagine if I told you, “we’re building nukes, but the people building them aren’t feeling great about our technical methods to target and control the nukes; they might all be launched unintentionally and blow up much of the world.” (I think it would be very fair for people to be up in arms about that!)

No sci-fi or “nine-part arguments” required; just a healthy respect for how close we came to inadvertent armageddon in the Cold War. (I’ll say some more speculative things in a footnote; look away, Tyler![9])

The pro-progress policy response

The popular discourse on covid policy often suffered from a severe poverty of imagination. People argued endlessly over a one-dimensional tradeoff: “how much should we lock down?” But better things were possible—expand the production possibility frontier! Rapidly developing vaccines and therapeutics, encouraging masks, and so on alleviated the tradeoff; we could both avoid lockdowns and prevent covid. That was the productive, pro-progress response to the pandemic.

I see some similar failures in the current AI discourse. We’ve got loud voices in favor of shutting it all down, and we’ve got loud voices for pushing ahead with reckless abandon. We can expand the production possibility frontier, folks! Here’s what I think we should do right now:

- The state of AI alignment research right now is embarrassing. There is way too little work on scalable alignment, and the research that is happening is very much not on track to solve this problem. But we can do better! Let’s do Operation Warp Speed for scalable alignment! Let’s get the best ML researchers in the world working on this; let’s have billion-dollar, concerted, ambitious efforts that match the gravity of the problem. Innovation can save the day. Ensuring reliable command and control for the most advanced weapons system man has ever created is a national priority. (And we don’t have to rely on dysfunctional government here—this can be a large-scale private effort. People are really cheems about how it’s impossible to do more here; more forthcoming.)

- We can be smart about monitoring the risk. I hear blunt cries for government regulation—but remember that it’s Lina Khan-types who would be writing these regulations right now. Instead, let’s get the main AI labs to agree to independent, intelligent monitoring (working with e.g. ARC evals). Before deploying a powerful new model, have auditors measure and assess the model. If there’s no catastrophic risk, as now—labs should be free to charge ahead. But labs should commit that if auditors find that a model has the capabilities for catastrophic damage (such as being able to build bioweapons or to autonomously “survive and spread”[10]) and the model doesn’t pass safety checks, they won’t deploy the model (until they've done the requisite technical work to pass the checks). Additional independent auditing could check things like infosec (at some point, model weights will become a target the same way nuclear secrets are—the risk of espionage is radically underrated). Think of this as the analog to masks / voluntary social distancing while we race to develop vaccines.[11]

As with covid, that’s the real pro-progress response to this problem. Much better things are possible; we just have to actually try. Endless online flamewars over “lockdown forever” vs. “let ‘er rip” are, well, much less productive.

During covid, “lockdown forever” and “let ‘er rip” weren’t just object-level bad policies; but whatever their appeal to some, both ignored basic constraints of political economy and societal dynamics. Tyler at the time was clear-eyed about this for covid.[12] These positions are similarly unrealistic for AI right now.

As discussed by Tyler and various others, pause and “lockdown forever” proposals on AI have been pretty naive so far. Even putting aside the critical China consideration, the “horse is out of the barn” on stopping AI progress. Zero nukes wouldn’t be a stable equilibrium; similarly, this paper has interesting historical case studies (such as post-WWI arms limitations and the Washington Naval Treaty) where disarmament in similarly dynamic situations destabilized, rather than stabilized.[13]

By the way, Sydney/Bing chat might have been the best thing that ever happened to AI safety. It is people seeing the power of these models that will catalyze the investments necessary. And to turn back to China—if you’re worried about AI risk, do you really believe that international agreements to halt progress are realistic? All paths to safe AGI flow through American hegemony.

There may be some critical future “crunch time” moment where a temporary pause is necessary, feasible, productive; right now, the 6 month pause wouldn’t accomplish much.[14]

Similarly, even if you are tempted by the “let ‘er rip” response (screw the doomers, you say)—society cares about safety, and society is increasingly waking up to AGI and AGI risk. We will probably see further, much scarier demos and wakeup calls yet. People will throw a lot of sand into the gears if your answer to “will your superhuman AGI system go haywire?” is merely “probably not?”. (I discuss this in a recent post.) If you want to build AGI (including to beat China), and you don’t address this problem in a better way, you’ll get some much worse cludge down the road.

Prediction, agnosticism, and fatalism

A year ago, Holden Karnofsky was saying “AGI could be soon”; Tyler’s response was that such AI forecasts “[make] my head hurt” and that ~we could never predict such a thing. (Though, to be fair, Tyler’s 2013 book emphasizes the future import of AI broadly.)

Tyler was wrong—and that’s totally fine, very many underrated AI progress until recently (I’ve updated in the last few years too). He’s changed his mind in his recent post with grace.

However, this does make me a bit more skeptical of Tyler’s recent stand on existential risk from AI—that no one can predict such a thing, and that the only response is radical agnosticism. I’ve heard that before.

Tyler loves to bash LessWrongers’ naivety. Forget about LessWrong, Tyler! The serious people worried about AI risk are the same people who correctly predicted rapid AI progress—and that’s because they’re the people who are actually building these systems.

One difference between worry about AI and worry about other kinds of technologies (e.g. nuclear power, vaccines) is that people who understand it well worry more, on average, than people who don't. That difference is worth paying attention to.

— Paul Graham (@paulg) April 1, 2023

Sam Altman and Ilya Sutskever (father of deep learning, Chief Scientist at OpenAI) say that scalable alignment is a technical problem whose difficulty we really shouldn’t underrate and we don’t have good answers for; they take AI x-risk very seriously. The main people who built GPT-3, and authored the seminal scaling laws paper[15]—Dario Amodei, Jared Kaplan, Tom Brown, Sam McCandlish, etc.—are literally the people who left OpenAI to found Anthropic (another AI lab) because they didn’t think OpenAI was doing enough about safety/alignment at the time. There are many more.[16]

These people correctly recognized the state of AI progress in the past,[17] and now they’re correctly recognizing the state of AI alignment progress. Right now, we don’t know how to reliably control AGI systems, and we’re not currently on track to solve this technical problem. That is my core proposition to you, Tyler, not some specific scifi scenario or nine-part argument. If AGI is going to be a weapon more powerful than nukes, I think this is a problem worth taking very, very seriously. (But it’s also a solvable problem, if we get our act together.)

A vivid memory I have of covid is how quickly people went from denial to fatalism. On ~March 8, 2020, the German health minister said, “We don’t need to do anything—covid isn’t something to worry about.” On ~March 10, 2020, the German health minister’s new position was, “We don’t need to do anything—covid is inevitable and there’s nothing we can do about it.” Yes, covid was in some sense inevitable, but there were plenty of smart things to do about it.[18]

The choice on AI isn’t “ongoing stasis” or “take the plunge.” We’re taking the plunge alright. If you thought covid was wild, this will be much wilder yet. The question is what are the smart things to do about it. I’ve made some proposals—what are yours, Tyler? There’s a lot of idle talk all around; I'd like much more “rolling up our sleeves to solve this problem.”

Thanks to Carl Shulman, Nick Whitaker, and Holden Karnofsky for comments on a draft.

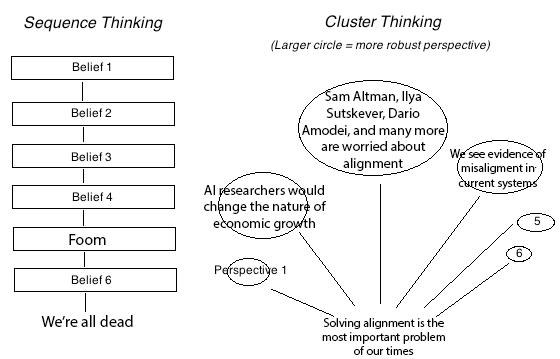

Appendix: re “nine-part arguments,” Nick Whitaker recommends you read Holden on cluster thinking vs. sequence thinking

- Aiming for consilience and persuasion here, not LessWrong karma! Other responses: Scott Alexander, Zvi Mowshowitz. Tyler complained that these didn’t apply historical analysis or address the China argument, among other things.

- A lot of the discourse seems too focused on absolutes (“AGI will definitely happen”/”AGI definitely won't happen”/”AI risk is 100%”/”AI risk is 0%”). I like probability distributions. (You can still care about something, even if you don't think it is certain!)

- But I will say that I don’t really think this is much of a knock-down response to any particular AI policy response. I’m optimistic because I am optimistic about our ability to deal with the challenges competently, if we get our act together, not because we won’t need to do anything at all.

- Or think “Europeans easily conquering the Americas because of better technology.”

- I grew up in Berlin, Germany, a city steeped in this history. One of my parents comes from the former East Germany, the other from the former West Germany; they met shortly after the Wall fell. This is very visceral to me!

- 8 years ago everyone thought Deepmind was clearly going to be first to build AGI, and that OpenAI was but a sideshow… (reported to me by people around then) See also this draft report by GovAI on Chinese AI capabilities, h/t Jordan Schneider.

- And do note that China is preparing to invade Taiwan (or at least start a Cuban Missile Crisis style standoff); the natsec debate now is more about when rather than if. (Tanner Greer has some great writing on this and related issues.)

- There’s a flipside too though—if I were the CCP, I would be pretty worried about AI being a threat to the Party’s grip on power.

-

Here's probably a closer analogy, though farther from the historical reasoning. Imagine if I told you: "we're building nukes, but we're putting a bunch of aliens in charge of our nukes. The aliens did well on our 'nuke controller' work test, but we don't really have any reason to be confident these aliens will actually reliably follow our commands."

That roughly describes what we're doing with AGI. It makes me feel pretty nervous.

I would put the chance of existential catastrophe from AI at ~5% in the next 20 years. I'm pretty optimistic overall that we can solve this command and control problem. But, a 5% chance of human extinction or similar, is, well... still scary high!

Tyler objects to the reasoning of many AI safety advocates, alleging it doesn't doesn't reason historically. I say, Tyler's conception of history considers only too recent of a past. Crazy things, like the industrial revolution, do happen! And homo sapiens wiping out the neanderthals and other hominids is part of history too.

Tyler doesn't like LessWrong; here are some better links. Here's a Jacob Steinhardt (ML prof at Berkeley) blog post about emergent deception; here's a demo of reward hacking; some Anthropic evals; here's Richard Ngo (OpenAI) on the alignment problem from a deep learning perspective; Joe Carlsmith on power-seeking AI; here's Erik Hoel convincing Russ Roberts that AI xrisk is real on Econtalk; here are some good Holden blog posts: 1, 2, 3, 4, 5.

- I.e., the AI system is able to autonomously replicate itself and survive on the internet, resistant to human attempts to shut it down.

-

Note that these are the proposals that I think make sense right now. Things might change.

The case/path to government regulation (rather than self-regulation) that seems most plausible to me at the moment is that we establish competent independent self-regulation, but maybe there is e.g. a lab that’s irresponsibly holding out on doing basic measures like this (and we get scary demos of real catastrophic risk), and then the successful self-regulation procedures can be adopted by the government in some way.

- For example, Tyler consistently argued that "let 'er rip" wasn't a realistic libertarian response to the pandemic. There was a libertarian schism, and Tyler was on the right side---state capacity libertarianism.

-

The key question for the stability of an arms control equilibrium is whether breakout is easy for a rogue actor.

1980s arms control during the Cold War reduced nuclear weapons substantially, but targeted a stable equilibrium. The US and the Soviet Union still had 1000s of nuclear weapons. MAD was assured, even if one of the actors tried a crash program to build more nukes; and a rogue nation could try to build some nukes of their own, but not fundamentally threaten the US or Soviet Union with overmatch.

However, when disarmament is to very low levels of weapons, or is amidst rapid technological change, the equilibrium is unstable. A rogue actor can easily start a crash program and threaten to totally overmatch the other players.

I claim this is the case with AI. Rapid algorithmic progress and AI chip improvements are making it dramatically easier every year to get powerful models; if a bunch of the current main players halted, it would soon be far too easy for a rogue actor to break out. (And that's putting aside the potentially critical consideration of eventual feedback loops, where AIs help engineer even-more-powerful AI models.) It's very hard to have a stable disarmament regime with AI right now.

-

Matthew Barnett's recent thread is good on this.

Even if, by some miracle, everyone agreed to a 6 month pause, a 6 months pause right now probably buys us much less than a 6 month slowdown---there'd be a capabilities overhang, and people would just use the time to set up their computers and algorithms for a bigger training run after the pause. And as I've discussed, AI alignment research isn't going particularly well at the moment---so extra time right now doesn't help that much---the key thing is scaling up much better efforts at alignment.

I also worry about "crying wolf." Models right now aren't actually catastrophically risky (yet); there's no imminent threat being headed off. And I think a lot of our slowdown levers are more "one-shot" (can't stop AGI forever, for example because we only have so much of a buffer ahead of China or other rogue actors, but we can buy ourselves a bit of a time one time).

I do think there could be some moment in the future---maybe we get an AI-equivalent of 9/11, or ARC evals-type efforts find credible evidence of an imminent catastrophic threat---where a pause is warranted and feasible. (And where we could use a pause much more usefully, because we've scaled up productive alignment research, and we have more powerful models to do engineering work with.)

- Always read Gwern.

- Geoff Hinton (godfather of deep learning) says that we don't know how to control AGI and is pretty concerned about risks. Demis is worried. Yoshua Bengio (another godfather of deep learning) signed the pause letter. In a survey of ML researchers, the median researcher put the chance of human extinction or similarly bad outcome at 5-10%.

- And before you ask, “did they actually bet on the market”—yes, many I know did, e.g. buy NVDA when it was many multiples below the current price.

- Tyler himself advocated for many smart policies, and made many useful things happen himself via Fast Grants!

FOR OUR POSTERITY Newsletter

Join the newsletter to receive the latest updates in your inbox.